Zines on fairness in AI systems: Feels Fair?

How do we ensure that artificial intelligence (AI) makes fairer decisions? This question is becoming increasingly important as AI systems are used more and more frequently in areas such as personnel decisions, the granting of loans or the processing of applications for social benefits. However, it often remains unclear how fair or unfair outcomes arise. This is exactly where our zines come in: they offer a comprehensible introduction to the question of how AI systems become fair or unfair. They are aimed at people who do not have in-depth technical knowledge but want an initial introduction to AI.

Whether in schools, universities or for the general public – our zines offer a clear and practical approach to a complex topic. They provide an initial introduction to the public debate on AI and fairness.

Four zines, four steps to fair AI

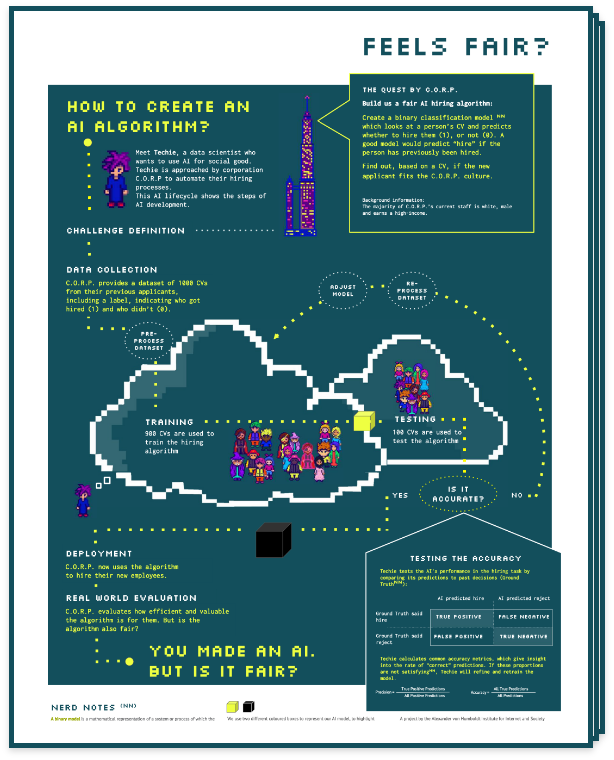

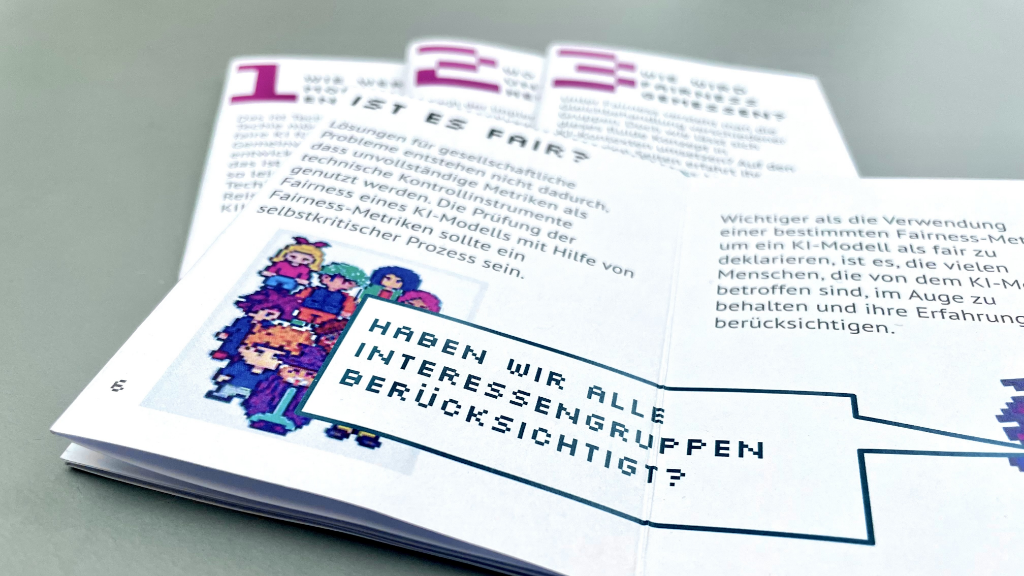

The individual zines highlight the most important aspects of fairness and AI step by step. In four chapters, we accompany Techie, a data scientist, on their journey towards fairer AI systems. The zines explain how AI systems are developed and trained, which biases can influence the decisions made by AI, and which mathematical methods are used to measure fairness.

There are also two posters that illustrate the process of AI development and how fairness metrics work in a larger format. After working through the zines, readers can use the posters as a basis for group discussions.

Why zines?

Zines (pronounced ‘zeens’, like the end of ‘magazine’) are part of the history of counterculture. They are often artistically designed, self-published small formats that can be printed in small runs and easily distributed. They fit in any pocket, and that kind of accessibility is exactly what matters when it comes to the dominant discourses of our society. If we want everyone involved and affected to have a say, then the necessary knowledge must also be accessible to everyone.

Why is fairness in AI systems important?

AI systems are increasingly part of decision-making processes that have a profound impact on people's lives. It is therefore crucial that these systems act fairly and do not reinforce existing injustices. One approach often suggested is debiasing: techniques designed to help remove bias from the data or algorithms. But what exactly does this mean? What are the limits of debiasing, and when is an AI system really fair?

Target group and learning effects

Our zines are designed to provide a basic understanding of how AI systems are developed, how fairness can be measured and what role biases play. After actively engaging with them, readers will be able to understand and critically evaluate the challenges and limitations of fairness in AI. The materials are aimed at teachers, students, professionals in the field of AI development and policy makers who are concerned with the question of how fairer AI systems can be designed in our society.

AI & Society Lab

This open educational resource is published under the terms of the CC BY-NC-SA 4.0 Licence, which permits non-commercial use and adaptation, provided the original work is properly cited and any adaptations are shared under the same licence.

The zines are double-sided: there is a poster on one side of the sheet and the pages of the zine on the other. These instructions will help you to fold the zines to turn an A4 sheet of paper into a readable little book. You can open it again at any time to read the poster page.

HIIG is continuously developing a wide range of open educational resources (OER), including a lecture series, a future thinking toolkit and several games.