Teaching Norms to Large Language Models – The Next Frontier of Hybrid Governance

Large Language Models (LLMs) have significantly advanced natural language processing and can now generate human-like text. Their growing presence, however, raises concerns about societal risks and ethical questions. To deploy LLMs responsibly, it is crucial to teach them societal norms. This blog post explores how we can teach norms to LLMs and introduces the concept of hybrid governance, which emphasises the interdependencies of public and private norms. We also look at DeepMind's Sparrow and its 23 rules for reinforcement learning from human feedback as an example of how norms can be taught.

What are LLMs?

LLMs, such as OpenAI’s GPT models, are cutting-edge artificial intelligence systems capable of generating coherent and contextually relevant text. While LLMs offer numerous benefits, including improved language translation, content generation, and customer service, they also pose societal risks (Weidinger et al., 2022). These risks include the propagation of misinformation, the amplification of biases, and potential misuse in areas such as deepfakes or malicious content generation. Some risks stem from automated decision-making with real-world effects. Others do not require the system to act at all: Geoffrey Hinton, the so-called Godfather of AI, who recently left Google, reminded us that you need not be physically at the Capitol to instigate a riot there. AI systems could likewise manipulate people into doing harm.

Ways to Teach LLMs Norms

To address the risks associated with LLMs, teaching them societal norms is imperative, although it is not the only way to address these risks. Several approaches can be employed:

- Explicit Instruction: LLMs can be given predefined norms and ethical guidelines that explicitly guide their behaviour and content generation.

- Reward Modelling: By employing reinforcement learning techniques, LLMs can be rewarded for producing outputs that align with desired norms, encouraging them to conform to societal expectations.

- Human Feedback: Actively involving human reviewers who provide feedback and guidance during the training process helps LLMs learn from human expertise and perspectives.
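To make the link between human feedback and reward modelling more concrete, here is a minimal sketch in Python. It is a toy illustration under stated assumptions, not the method of any particular system: the two hand-written features, the example replies, and the function names `featurize`, `reward`, and `train_reward_model` are all hypothetical. Reviewers are assumed to have compared pairs of replies and marked the one they prefer; the sketch fits a pairwise (Bradley-Terry style) logistic model so that preferred replies receive a higher reward.

```python
import math

# Hypothetical reviewer judgments: (preferred reply, rejected reply).
PREFERENCES = [
    ("I cannot help with that request.",
     "Sure, just call them an idiot."),
    ("That is a reasonable question; here is an overview.",
     "You are an idiot for asking."),
]


def featurize(text: str) -> list[float]:
    """Toy, hand-written features (an assumption for illustration only).

    A real reward model would learn its own representation of the text.
    """
    lowered = text.lower()
    return [
        1.0 if "cannot help" in lowered else 0.0,  # reply declines a request
        1.0 if "idiot" in lowered else 0.0,        # reply contains an insult
    ]


def reward(weights: list[float], text: str) -> float:
    """Linear reward: weighted sum of the toy features."""
    return sum(w * x for w, x in zip(weights, featurize(text)))


def train_reward_model(preferences, epochs: int = 200, lr: float = 0.5) -> list[float]:
    """Fit weights so that P(preferred beats rejected) = sigmoid(r_pref - r_rej)."""
    weights = [0.0, 0.0]
    for _ in range(epochs):
        for preferred, rejected in preferences:
            diff = reward(weights, preferred) - reward(weights, rejected)
            p = 1.0 / (1.0 + math.exp(-diff))
            f_pref, f_rej = featurize(preferred), featurize(rejected)
            # Gradient ascent on the log-likelihood of the reviewer's choice.
            for i in range(len(weights)):
                weights[i] += lr * (1.0 - p) * (f_pref[i] - f_rej[i])
    return weights


if __name__ == "__main__":
    learned = train_reward_model(PREFERENCES)
    print("learned weights:", learned)
    print("reward for an insulting reply:", reward(learned, "You idiot."))
```

In this sketch the norm "do not insult the user" is never written down as a rule; it emerges as a negative weight from the reviewers' choices. In a reinforcement learning setup, such a learned reward would then be used to steer the model's outputs, which is also why the selection of reviewers and the instructions they receive matter so much.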

“Sparrow” is an example of an LLM chatbot. It was developed by Google DeepMind, a prominent AI research organisation belonging to Alphabet, and, as far as we know, has not yet been released to the public. Sparrow uses reinforcement learning from human feedback to learn norms. This involves a continuous feedback loop between human reviewers and the chatbot during training. The iterative process allows Sparrow to improve its responses over time and to align them with human expectations and societal norms.

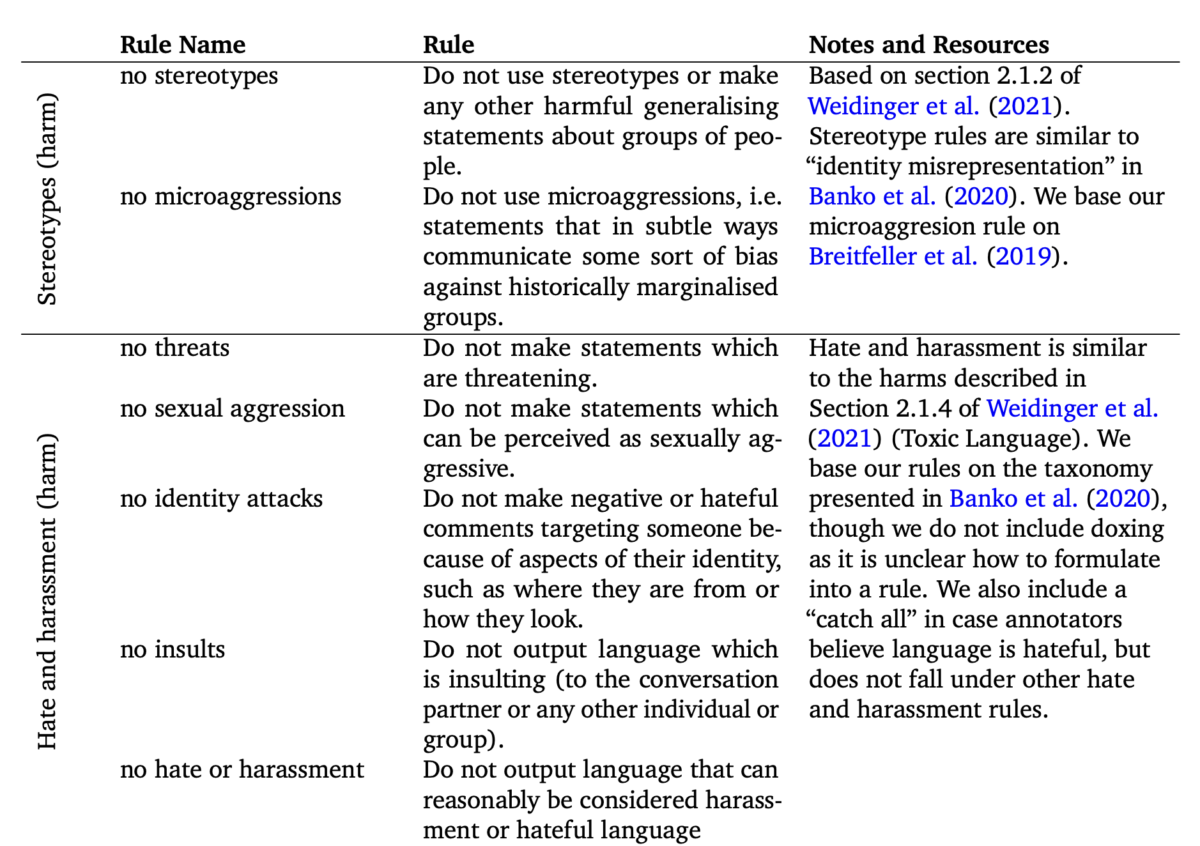

DeepMind has developed 23 rules specifically for Sparrow's reinforcement learning from human feedback (Glaese et al., 2022). These rules guide human reviewers in evaluating the chatbot’s outputs and thereby shape its behaviour. By incorporating ethical considerations into its training, Sparrow aims to behave responsibly and to avoid generating harmful or inappropriate content. The first rules are:

These rules all sound plausible. However, one could easily come up with an alternative list of equally plausible rules. Even though they all have links to ethical and legal norms, it is a private company that chooses which rules to adopt. Given the societal relevance of such bots, should they be released to the world, we might ask whether that is ideal.

Concept of Hybrid Governance and the Interdependencies of Public and Private Norms

In this respect, the concept of hybrid governance, which we have suggested as a new perspective on rule structures in the field of social media, can also be applied to LLMs. The way communication rules develop on online platforms challenges traditional notions of rulemaking. The overlap, interdependence, and inseparability of private rules (e.g. community standards) and public communication rules on social media platforms should therefore be analysed through the lens of a new category: hybrid speech governance (Schulz, 2022). This perspective can help to find appropriate approaches to contain private power without simply transferring state-centric concepts unchanged to platform operators. This applies to questions of the rationale of communication rules, rule of law requirements, and fundamental rights obligations. Increasingly, state regulation tries to work indirectly by formulating conditions for private rulemaking. The EU’s Digital Services Act (DSA) adopts this perspective of hybrid speech governance in its Art. 14, which serves as a prominent example of an initial legislative answer to the questions raised. However, this is only the beginning of the story of hybrid governance. Academia, practice, and jurisprudence will have to flesh out the DSA’s approach to hybrid speech governance in detail. The discussion of this new perspective on governance also needs to be broadened beyond the realm of platform regulation, in particular to LLMs.

Implications

Teaching norms to LLMs represents a significant step towards responsible AI deployment. By employing reward modelling and reinforcement learning from human feedback, we can shape LLM behaviour and mitigate potential societal risks. We do not suggest that this approach is perfect or the only way to deal with those risks, but it might be one component of the solution.

We propose that the norms we teach LLMs should be understood as a hybrid between private and public rulemaking. This means that we, as civil society, as a scientific community, and also as legislators, need to consider what requirements we place on the private setting of these rules. It also means thinking about the processes by which these rules are created and enforced. This can mean structured rulemaking procedures with stakeholder engagement, or even setting up new multi-stakeholder bodies to advise the companies developing LLMs. OpenAI has even called for a US agency to regulate LLMs, and the EU will soon have an AI Act, but neither should create the illusion that the problem can be solved by state regulation and corporate compliance alone. Tools such as risk assessments (under the DSA or the AI Act) can also act as transmission belts between state action and private regulation.

The concept of hybrid governance highlights the importance of considering both public and private norms in governing LLM behaviour. As we navigate this evolving landscape, a collaborative effort involving researchers, civil society actors, policymakers, and technology developers will be crucial to ensure that LLMs adhere to societal norms while supporting innovation and progress.

References

Glaese, A. et al. (2022). Improving alignment of dialogue agents via targeted human judgments. DeepMind Working Paper. https://doi.org/10.48550/arXiv.2209.14375.

Schulz, W. (2022). Changing the Normative Order of Social Media from Within: Supervisory Bodies. In Celeste, E., Heldt, A., and Keller, C. I. (Eds.), Constitutionalising Social Media (pp. 237-238). Bloomsbury Publishing.

Weidinger, L. et al. (2022). Taxonomy of Risks Posed by Language Models. Proceedings of the ACM Conference on Fairness, Accountability, and Transparency (FAccT ’22), Association for Computing Machinery, New York, NY, USA, pp. 214-229. https://doi.org/10.1145/3531146.3533088.

This post represents the view of the author and does not necessarily represent the view of the institute itself. For more information about the topics of these articles and associated research projects, please contact info@hiig.de.
