Autonomous weapons – reality or imagination?

How autonomous are autonomous weapons? Do they exist in reality? And in what ways do fictional characters like the Terminator shape how we think about this technology? These questions were at the centre of the HIIG conference Autonomous Weapon Systems – Realities and Imaginations of Future Warfare. Christoph Ernst (University of Bonn) and HIIG researcher Thomas Christian Bächle give an overview of what we can learn about technical autonomy from looking at these weapons systems.

Drones have become emblematic of a development that keeps the military, politics, industry and civil society on edge: the autonomisation of weapons systems. This unwieldy term denotes the plan to equip weapons with the capabilities to carry out missions independently. There is an obvious contradiction in the military’s assurance that humans can retain control over so-called autonomous weapons at all times: these systems can not only navigate independently, but can also carry out military actions that are independent of human decision-making, such as shooting down an incoming missile. In principle, they are capable of injuring and killing people, which is why the abbreviation AWS (for “autonomous weapon systems”) is often preceded by an L (for lethal; LAWS), highlighting their deadly functions. The decisions made by computer systems based on so-called artificial intelligence may have serious consequences. Just how momentous they can be in combat is the subject of controversial debate, and a number of questions remain unresolved.

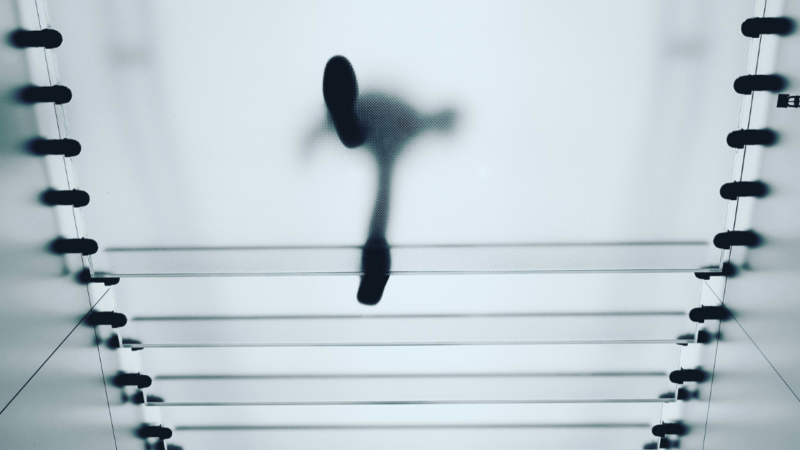

It is astonishing that there is no consensus on when weapons should be considered autonomous and in what respect they differ from automatic weapons. A vast number of highly automated weapons systems have long existed. The most-cited examples include the American Phalanx (see illustration) and the German Mantis system, both of which are used for short-range defence. These weapons are designed to destroy or incapacitate fast-approaching missiles with a rapid-firing system that is triggered automatically. Both are stationary systems that execute predefined processes. A technical system is referred to as autonomous when it requires no human control and can move and adapt to dynamic and unstructured environments for an extended period after being activated. Existing definitions of AWS are vague at best. What is certain, however, is that the autonomy of weapons has little to do with human autonomy, which refers instead to the ability to give oneself rules and thereby attain freedom.

Yet despite the inappropriateness of the comparison, no expert discussion of AWS fails to refer to the concept of human autonomy. For example, a position paper submitted by China in April 2018 to the Group of Governmental Experts of the UN Convention on Certain Conventional Weapons states that the autonomy of such systems should be understood, among other things, as a complete “absence of human intervention”: according to the characterisations in this paper, these weapons are impossible to switch off and should even be capable of “evolution, meaning that through interaction with the environment the device can learn autonomously, expand its functions and capabilities in a way exceeding human expectations”. These definitions explicitly establish associations with human autonomy. Their political relevance does not result from the call for the development of systems that are autonomous in this sense. Rather, this particular understanding of autonomy presumably aims to distract from already existing weapons systems which – although not fulfilling the criteria of human autonomy – can very well act independently.

The frequent references to science fiction scenarios, such as the autonomy of the killer robot in the Terminator films, very effectively shape collective notions about autonomous weapons. They are misleading and are commonly rejected in expert debates. Still, even in those expert discussions, assessments of what is feasible or real in the context of LAWS are overshadowed by fictitious speculation about potential scenarios, even when experts express a desire to avoid such comparisons. In this sense, the concept of autonomy brings together two contradictory aspects: on the one hand, it offers the possibility of classifying actual weapons systems (technical autonomy) and, on the other hand, of expressing purely fictional expectations of weapons technology (personal, human autonomy).

This is why the discussions on LAWS and their regulation are only a small part of a much larger debate about technical autonomy in general: the ongoing development of technical autonomy – in which it may acquire capabilities that are actually interpreted as characteristics of human autonomy – is currently perceived as an inevitable course of future development. The particular attention that is currently given to lethal autonomous weapon systems, both in expert communities and the general public, is the result of this circumstance. It can be ascribed to the dubious expectation that intelligent technical systems will be able to learn and will be equipped with free will and a consciousness of their own.

A real weapons system – the Phalanx close-in weapon system (left) – can be imaginatively transformed into a Terminator step by step (centre, the Terminator T-1; right, the T-800, screenshot obtained from James Cameron’s Terminator 2; USA 1991). The transformation of a weapons system that became known in the early 1980s is fictionally narrated using visual representations. Science fiction is instrumental in shaping today’s cultural ideas of LAWS. These can only be fully grasped if the anticipation shaped by imaginations of technical possibilities is taken into account.

The reality of LAWS is permeated by expectations about what is possible. Their social, political, legal or ethical meanings must be seen as an expression of diverse social imaginative processes that are equally influenced by expert discourses and popular culture. The primary task of these imaginaries is to give shape to an anticipated future: this future may become the foundation of efforts to realise it technically and, at the same time, guides the critical debates. In addition to the realities of technical functionality, i.e. the factuality of LAWS, it is therefore always necessary to question the imaginations of LAWS. What rhetorical means are used? What fears are addressed? What are the intentions and purposes?

Only by analysing these imaginations will we be able to fully grasp the reality of a technology that has not yet been actually realised.

The authors: PD Dr. Christoph Ernst is a media scientist at the University of Bonn focusing on diagrammatics, information visualisation, implicit knowledge, digital media (especially interfaces) as well as media change and imagination. Dr. Thomas Christian Bächle is head of the research programme “The evolving digital society” at HIIG.

Further References

Altmann, Jürgen/Sauer, Frank (2017): »Autonomous Weapons Systems and Strategic Stability«, in: Survival 59, 5, pp. 117-142.

Ernst, Christoph (2019): »Beyond Meaningful Human Control? – Interfaces und die Imagination menschlicher Kontrolle in der zeitgenössischen Diskussion um autonome Waffensysteme (AWS)«, in: Caja Thimm/Thomas Christian Bächle (eds.): Die Maschine: Freund oder Feind? Mensch und Technologie im digitalen Zeitalter, Wiesbaden: VS Verlag für Sozialwissenschaften, pp. 261-299. DOI: 10.1007/978-3-658-22954-2_12

Suchman, Lucy A./Weber, Jutta (2016): »Human-Machine-Autonomies«, in: Nehal Bhuta et al. (eds.): Autonomous Weapons Systems. Law, Ethics, Policy, Cambridge & New York, NY: Cambridge Univ. Press, pp. 75-102.

References to the illustrations

Illustration 1: Phalanx; sourced from: https://en.wikipedia.org/wiki/Phalanx_CIWS#/media/File:Phalanx_CIWS_USS_Jason_Dunham.jpg | Last accessed on 14.08.2019

Illustration 2: Terminator T-1; sourced from: https://terminator.fandom.com/wiki/File:14989_0883_1_lg.jpg | Last accessed on 14.08.2019

Illustration 3: Screenshot from Terminator 2: Judgment Day (1991); sourced from: https://terminator.fandom.com/wiki/Minigun | Last accessed on 14.08.2019

This post represents the view of the author and does not necessarily represent the view of the institute itself. For more information about the topics of these articles and associated research projects, please contact info@hiig.de.
