Research in Focus

Artificial intelligence and society

Artificial intelligence (AI) opens up a world in which the capabilities of technical systems resemble those of human intelligence. But AI isn't just about algorithms; it is deeply interwoven with our society. The future of AI technologies is closely linked to the automation of social processes and will touch every facet of our lives, from tailoring your social media feeds to driving innovation in healthcare and even climate research. It reaches beyond the screens we scroll: it's in our offices, our hospitals, our roads, and even in the cutting-edge robotic systems we design. Our research investigates the interplay of AI with the political, social, and cultural landscape, and explores the impact of AI discourses on society.

The manifold benefits of AI systems are evident in their pervasive impact on various domains. For example, technological automation helps to evaluate large amounts of data and thus to generate new knowledge. Further examples include the analysis of diagnostic images in medicine and pattern recognition in, or analysis of, voice, visual, and text data.

AI within our Society

AI systems are poised to take on tasks that were once the exclusive domain of human capability. The intent to bring AI technologies closer to the nature of human beings is reflected in the widespread use of technical terms such as "machine learning" or "neural networks", which link the capabilities of technical systems to a rational intelligence similar to that found in humans.

Today, AI systems are used to support or replace humans in everyday life and are associated with the increasing automation of social processes. This shift prompts critical reflection on many levels about AI in society, including questions of accountability, autonomy, and trust.

As we contemplate the dynamic between AI and society, it's clear that the impact of artificial intelligence extends far beyond technology.

AI Systems for Public Good

At HIIG we are particularly interested in systems that serve the public interest. In our research, we explore what public interest AI means in political theory, what challenges these projects face globally, and how AI systems with this purpose should be built and governed. The question of how AI affects society resonates deeply and urges us to explore the multidimensional impact of public interest AI. With the publicinterest.ai interface, we aim to expand the data on, and the discourse about, public interest AI systems worldwide, in order to foster exchange and to share standards and lessons learned about how these systems are shaping our society.

Different traditions of thinking about AI

The imaginaries associated with AI also play a major role in shaping the societal implications of these technologies. What people associate with the term AI varies considerably from culture to culture, which is why one of our projects compares AI-related controversies in different countries, in their media and in policy-making. In more theoretical work, we investigate how different traditions of thought in European and Asian countries shape whether AI is interpreted as a risk or as a solution.

Relatedly, it is impossible to think about artificial intelligence or artificial humans without forming particular ideas about what it means to be human in the first place. In our research we pay particular attention to the relationship between humans and machines, which is closely tied to questions of machine autonomy.

In summary, it is crucial for us to deepen our understanding of the complex relationship between AI and society. Our research emphasises the need for an informed dialogue to collaboratively shape the role that AI will play in our future.

MAKING SENSE OF THE DIGITAL SOCIETY

Louise Amoore: Our lives with algorithms

Explore how machine learning algorithms are radically changing the way we find meaning in society.

MAKING SENSE OF THE DIGITAL SOCIETY

Judith Simon: The ethics of AI and big data

How exactly can fundamental rights and moral values be taken into account when developing various AI systems?

Digitaler Salon

AI – The last one is cleaning up the internet

How is AI actually negotiated in our society, and do we need more information about the use and handling of AI?

Feature with Theresa Züger about the use of artificial intelligence for the common good

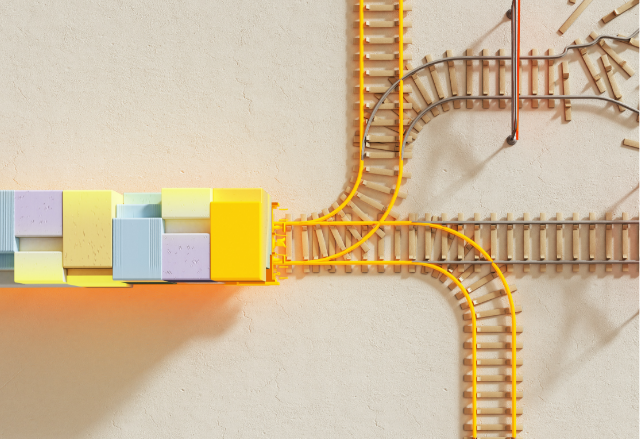

One step forward, two steps back: Why artificial intelligence is currently mainly predicting the past

While AI is seen as a technology of the future, it often relies on historical data. This blog post examines how AI can reproduce social inequalities and bias.

From theory to practice and back again: A journey in public interest AI

This blog post reflects on our initial Public Interest AI principles, using our experiences from developing Simba, an open-source German text simplifier.

AI under supervision: Do we need Humans in the Loop in automation processes?

Automated decisions have advantages but are not always flawless. Some propose a Human in the Loop as a solution. But does it guarantee better outcomes?

One small part of many – AI for environmental protection

What role does AI play in applications for environmental protection? This blog post takes a look at six German projects that use AI for this purpose.

EU AI Act – Who fills the act with life?

Under the EU AI Act, the shape of tomorrow's AI will be decided by authorities and companies within a complicated structure of competences.

Participation with Impact: Insights into the processes of Common Voice

What makes the Common Voice project special and what can others learn from it? An inspiring example that shows what effective participation can look like.