
Same same but (not so) different

Anonymisation is advertised as a privacy solution, while machine learning is portrayed as dangerous and evil – but these operations have more in common than is usually assumed.

Over the past few years, we, as computer scientists working in the field of privacy, surveillance and data protection, have been wondering: why are anonymisation and machine learning treated so differently in the discourse when they have more in common than they differ? Why is anonymisation regarded as the solution, while machine learning is problematised? We want to shed some light on the main characteristics they share and reflect on their different treatment in both academic and public discourse.

We will take a closer look at three dimensions: the input and output of each operation, the question of whether the function at stake is reversible, and the issue of bias.

Input and Output

Mathematical functions, just like computers, take an input and map it onto a certain output. For example, while we write this very blog post, our computers take keystrokes as input and map them to the corresponding characters on the screen as output. Or take the conversion of units: 0 °C as input is mapped onto 32 °F as output. Anonymisation and machine learning algorithms can likewise be characterised by their input and output. Let’s have a closer look:

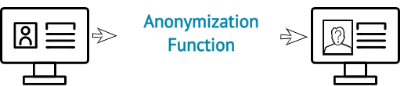

What is anonymisation? To answer that question in a way that suits this blog post, we will first leave aside technical specifics such as whether k-anonymity, l-diversity, t-closeness or even some “magic x-anonymiser” is used (for an easy introduction to these concepts, see Wikipedia). We also refrain from asking how “personal data” is actually defined; instead, we assume that there is only personal and non-personal data, that the two can be clearly distinguished from each other, and that there is no third kind of data. With these abstractions, we can capture anonymisation as an operation that takes personal data as input and produces anonymised, and therefore non-personal, data as output.
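To make the input/output view concrete, here is a minimal sketch of one possible anonymiser. It assumes hypothetical records and a simple generalisation scheme (drop the name, coarsen age to a decade, truncate the postcode) together with a k-anonymity check; it illustrates the idea only and is not a production-grade anonymiser.

```python
from collections import Counter

# Hypothetical personal records: (name, age, zip_code, diagnosis).
PERSONAL = [
    ("Alice", 34, "10115", "flu"),
    ("Bob", 36, "10117", "asthma"),
    ("Carol", 52, "10405", "flu"),
    ("Dave", 57, "10407", "diabetes"),
]

def generalise(record):
    """Drop the direct identifier and coarsen the quasi-identifiers."""
    _name, age, zip_code, diagnosis = record
    decade = age // 10 * 10
    age_range = f"{decade}-{decade + 9}"  # e.g. 34 -> "30-39"
    zip_prefix = zip_code[:3] + "**"      # e.g. "10115" -> "101**"
    return (age_range, zip_prefix, diagnosis)

def anonymise(records):
    """Map personal records (input) to generalised records (output)."""
    return [generalise(r) for r in records]

def is_k_anonymous(records, k):
    """True if every (age range, ZIP prefix) combination occurs at least k times."""
    groups = Counter((age_range, zip_prefix) for age_range, zip_prefix, _ in records)
    return all(count >= k for count in groups.values())

anonymised = anonymise(PERSONAL)
print(anonymised)
print(is_k_anonymous(anonymised, k=2))  # True
```

Each generalised combination here covers two people, so the output is 2-anonymous but not 3-anonymous.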

And machine learning? Machine learning algorithms operate on training data as input and generate mathematical models as output. Those models are based on a generalisation of the input data and allow for performing tasks like making predictions about the future, without explicitly being programmed to do so. All they are programmed for is to find patterns and make decisions based on those. Their ability to “foresee” the future is ascribed to the algorithms only by their users. Again, we deliberately refrain from going into technical detail like categorising the learning methods or classifiers that the machine learning algorithms use (again, Wikipedia can give you an introductory overview).

The input data used to train machine learning algorithms can consist of personal data, non-personal data, or both. While robots that write articles for the sports section are trained on non-personal data, recommender systems in online shops learn from personal data. For the sake of the argument, we will concentrate on applications using the latter.

A necessary byproduct of generating the mathematical model as output is that the personal data that served as input is generalised and thereby, more often than not, turned into non-personal data.
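The training-data-to-model mapping can be sketched as follows. As an assumption for illustration, the learner is a one-feature linear regression fitted by ordinary least squares (the post names no specific method), and the “personal” data is a hypothetical list of per-customer visit hours and spending.

```python
# Hypothetical personal training data: (hours spent in shop, euros spent) per customer.
TRAINING = [(1.0, 12.0), (2.0, 19.0), (3.0, 31.0), (4.0, 38.0)]

def fit_line(points):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in points)
    var = sum((x - mean_x) ** 2 for x, _ in points)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

def predict(model, x):
    """Use the generalised model to make a prediction for a new input."""
    slope, intercept = model
    return slope * x + intercept

model = fit_line(TRAINING)
print(model)               # (9.0, 2.5)
print(predict(model, 5.0)) # 47.5
```

The output is just two numbers summarising four records; no individual customer’s row can be read off directly. As the post discusses under reversibility, that alone is no guarantee against reconstruction.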

Reversibility

For any function or operation, we can ask whether the output can be mapped back onto the input, that is, whether the function is reversible or, in mathematical lingo, invertible. For example, we can convert 32 °F back to 0 °C. Can we do the same with non-personal data and convert it back to the original personal data?
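The contrast between an invertible function and a generalising one can be sketched in a few lines. The unit conversion is a one-to-one mapping with an exact inverse; the age-to-range function (a hypothetical stand-in for a generalisation step) maps many inputs to the same output, so it has no inverse in the strict mathematical sense. Recovering the original then requires additional information rather than a literal inverse function.

```python
def celsius_to_fahrenheit(c):
    return c * 9 / 5 + 32

def fahrenheit_to_celsius(f):
    """Exact inverse of celsius_to_fahrenheit."""
    return (f - 32) * 5 / 9

def age_to_range(age):
    """Many-to-one generalisation: 34 and 36 both map to '30-39'."""
    decade = age // 10 * 10
    return f"{decade}-{decade + 9}"

print(celsius_to_fahrenheit(0))   # 32.0
print(fahrenheit_to_celsius(32))  # 0.0
# Two different inputs share one output, so the output alone cannot tell us the input.
print(age_to_range(34) == age_to_range(36))  # True
```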

Yes, at least in principle. In the context of anonymisation, this inverse function is called de-anonymisation or, at times, re-identification. Reversibility is also discussed in the machine learning field, under a variety of names such as “model inversion” or “membership inference”.
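One common route to re-identification is a linkage attack: the attacker combines the anonymised release with auxiliary data that still carries names. The sketch below assumes the attacker knows the generalisation scheme and holds a small auxiliary dataset; all names, records and the helper `link` are hypothetical.

```python
# Hypothetical anonymised release: (age range, ZIP prefix, diagnosis).
RELEASED = [
    ("30-39", "101**", "flu"),
    ("50-59", "104**", "diabetes"),
]

# Hypothetical auxiliary data held by an attacker, e.g. a public register.
AUXILIARY = [
    ("Alice", 34, "10115"),
    ("Carol", 52, "10405"),
    ("Erik", 45, "10243"),
]

def generalise(age, zip_code):
    """Apply the generalisation the publisher is assumed to have used."""
    decade = age // 10 * 10
    return (f"{decade}-{decade + 9}", zip_code[:3] + "**")

def link(released, auxiliary):
    """Return (name, diagnosis) pairs where exactly one known person fits a released record."""
    matches = []
    for age_range, zip_prefix, diagnosis in released:
        candidates = [name for name, age, zip_code in auxiliary
                      if generalise(age, zip_code) == (age_range, zip_prefix)]
        if len(candidates) == 1:
            matches.append((candidates[0], diagnosis))
    return matches

print(link(RELEASED, AUXILIARY))  # [('Alice', 'flu'), ('Carol', 'diabetes')]
```

If the auxiliary data contained a second person in the same age range and ZIP prefix, this naive attack would no longer single anyone out, which shows how strongly the outcome depends on context and available data.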

In both cases, inverting the anonymisation function or reversing the machine learning model can be prevented by appropriate design and implementation, depending on the context and the data used.

Bias: what goes around, goes around, goes around, comes all the way back around

It is widely known, and problematised in public discussion, that machine learning models inherit the bias of their input data. For example, the recidivism prediction software COMPAS, which is used to estimate how likely defendants are to reoffend, was shown in 2016 to be biased against Black defendants, which sparked a major public debate.

When we take a closer look at anonymisation, we observe the same phenomenon, although it is barely discussed: if the input data is biased, anonymisation produces non-personal data exhibiting a similar bias, at times even the very same one. Imagine an entirely anonymised job recruitment process, in which incoming applications are anonymised and evaluated against the likewise anonymised “successful” applications of people who have actually been hired. If the company has so far hired mainly men, the recruitment process will be biased towards men, regardless of the anonymisation. This comes as no surprise: besides anonymising the data, the engineer’s main goal is to preserve its statistical properties. And technically, that’s what a bias is in statistics: just a property.
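The recruitment example can be sketched with a toy dataset. For simplicity, we assume that the anonymiser removes only the direct identifier (the name) and keeps the attributes the evaluation relies on; the records are hypothetical. The per-group hiring rate, which is the statistical property encoding the bias, is identical before and after anonymisation.

```python
from collections import defaultdict

# Hypothetical past applications: (name, gender, years_experience, hired).
PAST = [
    ("Anna", "f", 5, False),
    ("Ben", "m", 3, True),
    ("Chris", "m", 6, True),
    ("Dana", "f", 7, False),
    ("Eli", "m", 2, True),
    ("Fay", "f", 4, True),
]

def anonymise(records):
    """Drop the name; keep the attributes used for evaluation."""
    return [(gender, years, hired) for _name, gender, years, hired in records]

def hire_rates(records, gender_of, hired_of):
    """Share of hired applicants per gender group."""
    totals, hires = defaultdict(int), defaultdict(int)
    for r in records:
        g = gender_of(r)
        totals[g] += 1
        hires[g] += hired_of(r)  # True counts as 1, False as 0
    return {g: hires[g] / totals[g] for g in totals}

before = hire_rates(PAST, lambda r: r[1], lambda r: r[3])
after = hire_rates(anonymise(PAST), lambda r: r[0], lambda r: r[2])
print(before == after)  # True: the bias survives anonymisation unchanged
```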

Discourse Treatment

Though anonymisation and machine learning share similar characteristics, the academic and public discourse focuses on them in fundamentally different ways:

The discourse on model generation in machine learning is chiefly concerned with the input dimension of the system, which the anonymisation discourse largely ignores. As a result, machine learning is criticised for operating on personal data and is considered a threat to privacy and data protection, while there is almost no criticism of anonymisation, even though it operates on similar input. Conversely, anonymisation is widely heralded for producing non-personal data as output, while the output of machine learning receives little attention in the debate. Thus, the discourse on machine learning is fixated on the input, whereas the anonymisation discourse is essentially output-focused.

The debate on anonymisation has long since accepted that, in general, de-anonymisation is possible. Whether data can be properly (that is, irreversibly) anonymised depends on the context and purpose of the processing as well as on the particular input data. While the discourse has therefore moved towards a fine-grained, context-specific evaluation of both the merits and the feasibility of anonymisation, the reversibility of machine learning models is still treated as a coarse-grained issue: either it’s reversible, or it’s just not.

The bias of the data, and consequently the models, predictions and decisions built upon them, is one of the biggest concerns in the discourse on machine learning. It has sparked major debates about the application of machine learning techniques in different fields and even led to calls for banning them in some sectors or regarding particular social groups. At the same time, the bias issue is completely – and thus remarkably – absent from the anonymisation debate, even though anonymised data is as biased as the personal data that was put into the algorithm.

In short, although anonymisation and machine learning share similar characteristics, operate on the same kind of data, produce similar results and may even have similar implications, they are treated fundamentally differently in the discourse. Anonymisation techniques are primarily perceived and discussed as a solution, while machine learning techniques are mainly treated as a problem, and neither perception can be explained by their respective characteristics. Perhaps that is what lies at the heart of the problem: there is a fundamental lack of reflection on what these techniques actually do, how they work, and what that implies. Instead, apart from a very small expert community, both the academic and the public debate are biased and treat “machine learning” and “anonymisation” primarily as empty signifiers, as markers for “problem” and “solution”. Unfortunately, that bias and these blind spots are then mirrored in the expert community. We say “unfortunately” because experts are the ones who frame what can and should be researched, who steer how the issues should be addressed, and who delimit what can be observed. Of course, expert framing plays an essential role in science. But at least as essential to science is the constant questioning and reassessment of one’s own methods and of the assumptions used in that framing.

tl;dr

Although data anonymisation and machine learning are treated as fundamentally different in public and scientific discourse, they have more in common than is commonly assumed. We identify some of the main characteristics they share and thereby aim to expose this “thematic bias” in the debate.

This post represents the view of the author and does not necessarily represent the view of the institute itself. For more information about the topics of these articles and associated research projects, please contact info@hiig.de.
