Turning back time for Content Moderation? How Musk’s takeover is affecting Twitter’s rules

Elon Musk’s takeover of Twitter marks a critical moment in the history of platforms. Within days, Musk moved to publicly question some of the policies that the platform had developed over the years. A closer look reveals that, at least until now, Twitter’s content policies have not changed. Yet Musk’s leadership seems aimed at rolling back 15 years of learning about and refining content moderation at Twitter and across the industry.

The takeover of Twitter by Elon Musk has catapulted the platform into the centre of public attention. Within days of completing the acquisition, the platform’s new CEO posted demonstrably false information, let go of two-thirds of Twitter’s employees, reinstated Donald Trump on the platform following a Twitter poll on the topic and announced the introduction of a subscription model for verification. Rarely has the power that platforms wield over public discourse received this much media attention.

A key way in which platforms exert this power is through content moderation, i.e. by writing and enforcing rules about which kinds of content are allowed and prohibited on their services. As internet platforms have emerged as central venues for communication and interaction, and given the frequent absence of other forms of regulation, the way they govern user activity has tremendous consequences for how our increasingly digital society is organised. This has become visible in controversies over issues such as hate speech and misinformation, which point to the role and responsibility of platforms in governing public speech and communication dynamics.

Musk’s makeover of Twitter

Given Musk’s earlier vocal criticism of Twitter’s content policies for stifling free speech, and his apparent announcements of policy changes via tweets, uncertainty arose among users, journalists and advertisers about the direction the platform would take and how it would go about enforcing its existing policies. Further confusion ensued when the news site “The Hill” reported that Twitter had removed its misinformation policies from the Twitter Rules.

Musk attempted to calm the waters by assuring that “No major content decisions” would be made before the formation of a new content moderation council and that “Twitter’s strong commitment to content moderation remains absolutely unchanged”. He also seemed eager to emphasise the continuing validity of “The Twitter Rules” (Twitter’s central body of content policies), tweeting that “Twitter rules will evolve over time, but they’re currently the following”. However, even after this statement, Musk seemed to announce changes to Twitter’s policy on parody accounts in a tweet.

An intricate web of policies

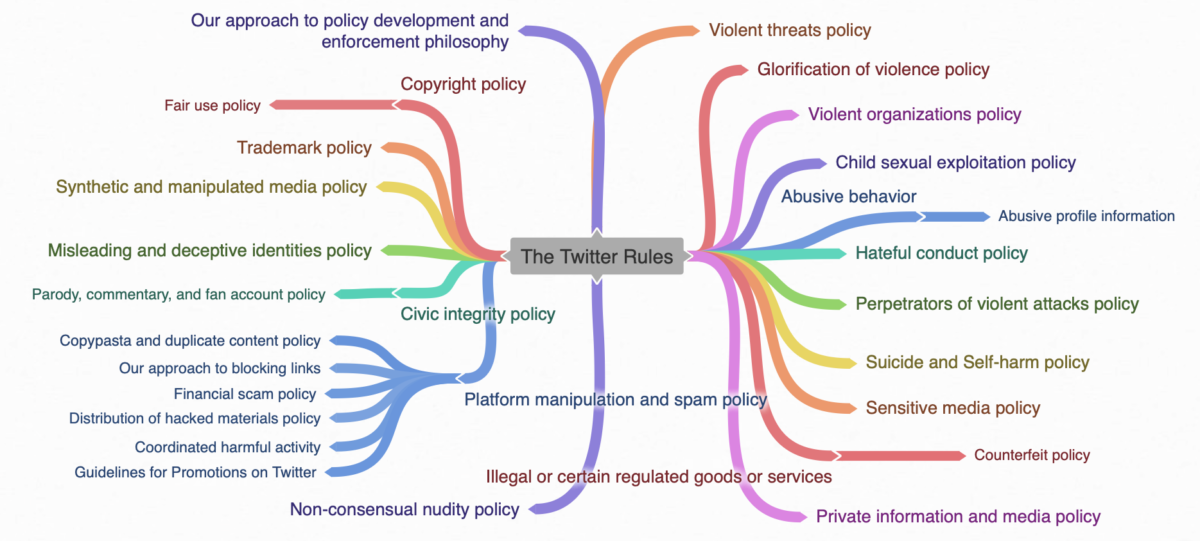

A closer look at the historical evolution of Twitter’s policies based on our database of platform policies (the Platform Governance Archive) can clear up some of the current confusion and provide valuable context for making sense of the platform’s current transformation. Twitter’s Community Guidelines, “The Twitter Rules”, first appeared on the site in January 2009, about two and a half years after Twitter was launched to the public. Since then, they have functioned as the central reference point for what kinds of content and behaviour are allowed and forbidden on the platform, and Twitter often calls upon its users to check whether their conduct is in line with the Twitter Rules.

Over the years, the size and scope of the Twitter Rules have grown enormously: they have ballooned from a brief set of rules with 567 words (a one-page printout) into a complex web of policies totalling 19,131 words, spread across different URLs. This growth happened in waves and accelerated especially from the second half of 2017 onwards, amid increasing public and policy pressure. As of now, the Twitter Rules link to 19 sub-pages which explain the policies referenced on the main page and which themselves link to further pages such as other policies, help pages and appeal forms.

The opacity of the Twitter Rules

Defining exactly where the Twitter Rules begin and end is therefore a difficult task in itself, especially since Twitter has begun to create specific policies outside the Twitter Rules, such as a “Crisis misinformation policy” and a policy on COVID-related misinformation. All of these policies are listed on Twitter’s “Rules and policies” page. But even when a policy has been included in the Twitter Rules, the corresponding pages have sometimes existed before they became part of the Twitter Rules or continued to exist after they were removed from them. The inclusion of a policy in the Twitter Rules, or its removal from them, therefore does not mark the inception or cessation of that policy.

This points to one of the complexities in understanding Twitter’s Community Guidelines, and platforms’ Community Guidelines more generally: unlike more legalistic policies such as the Terms of Service or the Privacy Policy, their limits are not explicitly defined. As the Twitter Rules evolved from a brief set of rules into an extensive rulebook with ever more specific subsections spread across various URLs, it became increasingly hard for users to navigate them and to tell when they had changed.

This is complicated by the fact that, unlike Twitter’s Terms of Service and Privacy Policy, the overarching Twitter Rules page does not include an effective date or a date of last revision, although most of its subpages do. Furthermore, the company does not offer an archive of previous versions of the Twitter Rules like those it provides for its Terms of Service and Privacy Policy.

Twitter’s content policies remain unchanged

As part of our efforts to track and analyse platform policy changes at the Platform Governance Archive, we have been tracking every change to the Twitter Rules, i.e. the whole web of policies they comprise, from their inception in 2009 onwards. Since Musk finalised his takeover of Twitter on October 27, none of these policies has changed. This also applies to the policy on parody accounts, which Musk seemed to question in his tweets.

We also did not detect any change to the platform’s Terms of Service or Privacy Policy in connection with Musk’s takeover. However, the “Twitter Purchaser Terms of Service” were updated on November 9; among other things, the update introduced a clause that seems aimed at preventing identity misrepresentation under the new subscription model.

Although Twitter’s rules for content moderation have not yet changed, they could change at any moment, and going forward it will be vital to track how they evolve in the wake of Musk’s takeover. Studying these changes from a historical perspective will allow us to assess whether and how Musk’s conception of the public sphere is reshaping the platform’s rules. We will therefore monitor any change closely and publish it soon in an updated dataset of the Platform Governance Archive.

You cannot run social media like it’s 2008

Musk’s takeover of Twitter marks a critical moment in the evolution of platforms, one that surfaces key issues of platform power and governance. Among the many issues it raises, we have focused here on platform policies. Looking at our archive, we see the long trajectory of development that Twitter’s policies have followed over the platform’s 16-year existence. Under massive public, economic and policy pressure, Twitter has built up often elaborate policies on challenging issues such as misinformation and hate speech, as well as expert teams to enforce those policies and safeguard users.

While the core policies have not yet changed, the recent weeks have shown that Elon Musk neither appreciates nor fully understands the complexity and importance of content moderation. In fact, he is tweeting about content moderation as if it were 2008. By insisting that Twitter is at heart a tech company, Musk is joining in a song that Mark Zuckerberg and other platform leaders abandoned long ago, after singing it loudly during the first decade of social media. In the years since, social media platforms have come to understand that they need to take responsibility for the content and interactions on their sites, and that content moderation, in fact, “is the essence of platforms, it is the commodity they offer”, as Tarleton Gillespie wrote in 2018 (Gillespie 2018, p. 204). Social media platforms have at least partly learned how to deliver in this role.

Rules without enforcement?

Musk now departs from all this drastically and impetuously. While the core policies might still be in place, the teams managing rules, safety and enforcement at Twitter have been sharply reduced, if they still exist at all. Those who remain at the company lack backing from leadership. This certainly casts doubt on the future of Twitter’s elaborate body of content policies and enforcement system and raises the question: what are all the rules worth if there is no one left to enforce them?

References

Gillespie, T. (2018). Custodians of the Internet: Platforms, Content Moderation, and the Hidden Decisions That Shape Social Media. New Haven: Yale University Press.

This post represents the view of the author and does not necessarily represent the view of the institute itself. For more information about the topics of these articles and associated research projects, please contact info@hiig.de.
