
One step forward, two steps back: Why artificial intelligence is currently mainly predicting the past

Artificial intelligence is often hailed as the technology of the future. Yet it primarily relies on historical data, reproducing old patterns instead of fostering progress. This blog article examines how AI systems can reinforce societal inequalities and marginalisation and explores their impact on social justice. Can we create AI that moves beyond the biases of the past?

The increasing use of AI in many areas of our daily lives has intensified the discourse surrounding the supposed omnipotence of machines.[i] The promises surrounding AI range from genuine excitement about problem-solving assistants to the dystopian notion that these machines could eventually replace humans. Although that scenario is still far off, both state and non-state actors place great hope in AI-driven processes, which they perceive as more efficient and optimised.

Artificial intelligence is already part of everyday life, for instance when it guides us to our destination or pre-sorts our emails. What many people are unaware of, however, is that it is increasingly used to make predictions about future events in many areas of society. From weather forecasting to financial markets and medical diagnoses, AI systems promise more accurate predictions and better decision-making. These forecasts rest on the historical training data on which AI systems are built. More specifically, many AI systems are based on machine learning algorithms, which analyse historical data to identify patterns and then use these patterns to forecast the future. This also implies that the often-assumed neutrality or objectivity of AI systems is an illusion. In practice, current AI systems tend to reproduce past patterns, not least because their training data are often inadequate and incomplete. This further entrenches existing social inequalities and power imbalances. For instance, if an AI system for screening job applications is trained on historical data drawn predominantly from one demographic (e.g., white, cisgender men), it may favour similar candidates in the future. This amplifies existing inequalities in the job market and further disadvantages marginalised groups such as BIPoC, women, and individuals from the FLINTA/LGBTIQA+ communities.
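How a system can "learn" a past preference can be sketched with a deliberately simplistic model. This is a hypothetical illustration, not how any real hiring product works: the records, the group labels `group_a` and `group_b`, and the counting "model" are all invented. The model simply learns the historical hiring rate for each group and uses it as the score for new applicants, so two equally qualified people receive different scores purely because of who was hired in the past.

```python
from collections import defaultdict

# Hypothetical historical hiring records: (group, qualified, hired).
# All numbers are invented for illustration; every applicant is qualified.
HISTORY = (
    [("group_a", True, True)] * 80 + [("group_a", True, False)] * 20
    + [("group_b", True, True)] * 30 + [("group_b", True, False)] * 70
)


def fit_hire_rates(records):
    """Learn P(hired | group, qualified) by simple counting."""
    hired = defaultdict(int)
    total = defaultdict(int)
    for group, qualified, was_hired in records:
        key = (group, qualified)
        total[key] += 1
        hired[key] += int(was_hired)
    return {key: hired[key] / total[key] for key in total}


def score(model, group, qualified):
    """Score a new applicant with the rate learned from past decisions."""
    return model.get((group, qualified), 0.0)


model = fit_hire_rates(HISTORY)
# Two equally qualified applicants who differ only in group membership:
print(score(model, "group_a", True))  # 0.8
print(score(model, "group_b", True))  # 0.3
```

Nothing in the code refers to merit; the gap between 0.8 and 0.3 comes entirely from the historical decisions the model was fitted on.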

Power imbalances and perspectives in the AI discourse – Who imagines the future?

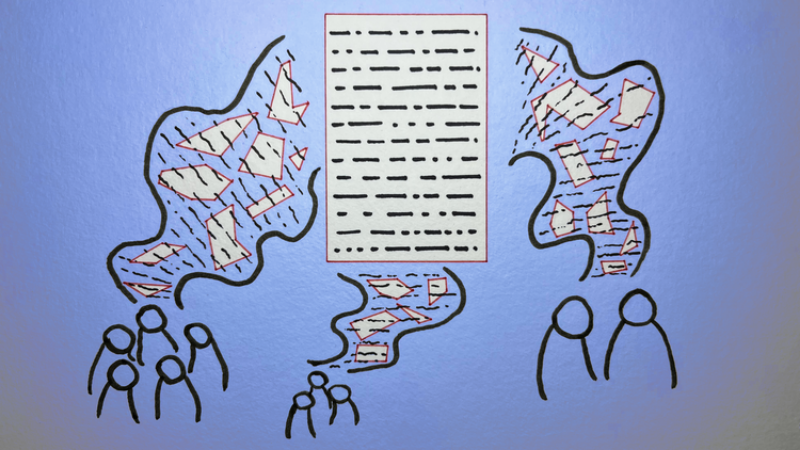

The discourse surrounding Artificial Intelligence is closely linked to social power structures and the question of who holds interpretative authority in this conversation. There exists a significant imbalance: certain narratives and perspectives dominate the development, implementation, and use of AI technology. This dominance profoundly impacts how AI is perceived, understood, and deployed. Thus, those who tell the story and control the narrative also wield the power to shape public opinion about AI. Several factors contribute to this so-called narrative power imbalance in the AI discourse.

In the current technological landscape, large tech companies and actors from the Global North often dominate the AI narrative. These entities possess the resources and power to foreground their perspectives and interests. Consequently, other voices, particularly from marginalised or minoritised groups, remain underrepresented and insufficiently heard. Additionally, media portrayals of AI tend to oversimplify its complexities and fail to do justice to its real impact on society.

The narrative power imbalance also arises because the teams developing AI are frequently not diverse. When predominantly white, cisgender male perspectives from resource-rich regions shape development, other viewpoints and needs are overlooked. For example, AI systems used to assess individual creditworthiness often rely on historical data that reflect populations already granted access to credit, such as white, cisgender men from economically prosperous areas. This systematically disadvantages individuals from marginalised groups, including women, People of Colour, and people from low-income backgrounds. Their applications are approved less often because the historical data do not represent their needs and financial realities. This exacerbates economic inequalities and reinforces power dynamics in favour of already privileged groups. The same applies to the regulation and governance of AI: those with the authority to enact laws and policies determine how AI is regulated.

The past as prophecy: Old patterns and distorted data

As mentioned above, AI systems often rely on historical data shaped by existing power structures and social inequalities. The systems adopt these biases and incorporate them into their predictions. As a result, old inequalities not only persist but are also projected into the future. This creates a vicious cycle in which the past dictates the future, cementing or, in the worst case, exacerbating social inequalities. The use of AI-based predictive systems can lead to self-fulfilling prophecies, in which certain groups are favoured or disadvantaged on the basis of historical preferences or disadvantages.
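The vicious cycle described above can be illustrated with a deliberately simplified simulation, loosely modelled on predictive policing. It is a hypothetical sketch, not a description of any deployed system: the district names, the record counts and the rule "patrol wherever past records are highest" are assumptions. Both districts have the same true number of incidents, but because incidents are only recorded where the patrol is sent, a small initial difference in the historical records decides where all future records accumulate.

```python
# Hypothetical assumption: both districts have the same true number of
# incidents per round; only the historical records differ at the start.
TRUE_INCIDENTS_PER_ROUND = 10


def simulate(initial_records, rounds):
    """Allocate one patrol per round based on past records (a toy 'prediction')."""
    records = dict(initial_records)
    for _ in range(rounds):
        # The 'forecast': send the patrol where the most incidents were recorded.
        target = max(records, key=records.get)
        # Only incidents in the patrolled district are observed and recorded;
        # incidents elsewhere still happen but never enter the data.
        records[target] += TRUE_INCIDENTS_PER_ROUND
    return records


result = simulate({"north": 12, "south": 10}, rounds=5)
print(result)  # {'north': 62, 'south': 10}
```

The recorded gap grows from 2 to 52 incidents although nothing differs in reality, and the resulting data then appear to confirm the very prediction that produced them.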

Rules for AI: Where does the EU stand?

The discourse on the political regulation of Artificial Intelligence often lags behind the rapid development of the technology. After lengthy negotiations, the European Union has finally adopted the AI Act. Both UNESCO and the EU had previously highlighted the risks of deploying AI in sensitive social areas. According to the European Commission, AI systems could jeopardise EU values and infringe fundamental rights such as freedom of expression, non-discrimination, and the protection of personal data. The Commission states:

“The use of AI can affect the values on which the EU is founded and lead to breaches of fundamental rights, including the rights to freedom of expression, freedom of assembly, human dignity, non-discrimination based on sex, racial or ethnic origin, religion or belief, disability, age or sexual orientation, as applicable in certain domains, protection of personal data and private life, or the right to an effective judicial remedy and a fair trial, as well as consumer protection.” (European Commission 2020:11)

UNESCO also emphasises that gender-specific stereotypes and discrimination in AI systems must be avoided and actively combated (UNESCO 2021:32). To this end, UNESCO launched the “Women for Ethical AI” project in 2022 to integrate gender justice into the AI agenda. The UN AI Advisory Board similarly calls for public-interest-oriented AI and highlights gender as an essential cross-cutting issue. In Germany, however, concrete rules to prevent algorithmic discrimination are still lacking, so the General Equal Treatment Act (AGG) needs to be adapted.

With the Artificial Intelligence Act, the European Union has taken a significant step towards regulating AI. Nevertheless, specific provisions on gender equality have yet to be firmly established. The legislation takes a risk-based approach to ensure that the use of AI-based systems does not adversely affect people’s safety, health, and fundamental rights. The legal obligations depend on the risk an AI system poses: systems posing an unacceptable risk are prohibited, high-risk systems are subject to specific requirements, and low-risk systems face few or no obligations. Certain applications of so-called social scoring and predictive policing are therefore to be banned. Social scoring refers to the assessment of individuals based on personal data, such as credit behaviour, traffic violations, or social engagement, in order to regulate their access to certain services or privileges. Predictive policing, on the other hand, uses AI to predict potential future crimes through data analysis and to take preventive measures. Both practices are criticised for potentially leading to discrimination, privacy violations, and social control. However, there are exceptions in the context of “national security” (AI Act 2024, Article 2).

Gaps in the AI Act

Critical voices such as AlgorithmWatch argue that, while the AI Act restricts the use of facial recognition by law enforcement in public spaces, it also contains numerous loopholes (Vieth-Ditlmann/Sombetzki 2024). Although the risks posed by “(unfair) bias” are acknowledged in many parts of the legislation, they are inadequately addressed in concrete terms. Gender, race, and other characteristics appear as categories of discrimination. However, an explicit requirement to prevent bias in datasets and outcomes is found only in Article 10, paragraphs 2(f) and 2(g), and it applies only to so-called high-risk systems. These provisions demand “appropriate measures” to identify, prevent, and mitigate possible biases. What these “appropriate measures” entail will only become clear through legal implementation. A corresponding definition is still pending, and it will be difficult to formulate given how widely the systems concerned differ. What conclusions can be drawn from this regulatory debate?

To prevent discriminatory practices from being embedded in AI technologies, the focus must be on datasets that represent society as a whole. The AI Act only vaguely addresses this in Article 10, paragraph 3, stating that data must be “sufficiently representative and, as far as possible, accurate and complete concerning the intended purpose.” However, these requirements allow for various interpretations.

One potential solution involves the use of synthetic data, which consists of artificially generated information simulating real data to fill gaps in the dataset and avoid privacy issues. Simultaneously, diversity in development teams should be promoted to prevent one-sided biases. Whether the AI Act will achieve the desired effects remains to be seen in practice.

The God Trick[ii]

The EU and other regulatory bodies have recognised that data must be representative, but this alone is not sufficient. The problem is twofold: on the one hand, essential data points from marginalised groups are often missing from training datasets; on the other, these groups are disproportionately represented in the data of certain societal areas, such as the allocation of social benefits. This creates a paradox and points to a further strand of intersectional feminist critique: it is not only the absence of data points that is problematic but also the excessive collection of data about marginalised groups in other systems. Governments frequently hold far more data about poor or marginalised populations than about resource-rich, privileged ones. This excessive collection can lead to injustice.

“Far too often, the problem is not that data about minoritized groups are missing but the reverse: the databases and data systems of powerful institutions are built on the excessive surveillance of minoritized groups. This results in women, people of color, and poor people, among others, being overrepresented in the data that these systems are premised upon.” (D’Ignazio/Klein 2020:39)

One example is the system in Allegheny County, Pennsylvania, that Virginia Eubanks describes in “Automating Inequality”. An algorithmic model was designed to predict the risk of child abuse in order to protect vulnerable children. However, while the model draws on vast amounts of data about poorer parents who rely on public services (including information from child protection, addiction treatment, mental health services, and Medicaid), such data are often absent for wealthier parents who use private health services. As a result, low-income families are overrepresented and disproportionately classified as risk cases, which often leads to children being separated from their parents. Eubanks describes this process as a conflation of “parenting in poverty” with “bad parenting.” It is yet another example of how biased data reinforce inequalities and further oppress the most disadvantaged members of society.
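The overrepresentation that D’Ignazio/Klein and Eubanks describe can be made concrete with a small, hypothetical calculation. It does not reproduce the Allegheny County model; the group labels, case counts and visibility percentages are invented. Both groups have the same number of actual cases, but cases in one group are far more likely to be documented in public records, so any system that learns from these records will associate risk mainly with that group.

```python
# Hypothetical assumption: both groups have the SAME number of actual cases.
ACTUAL_CASES_PER_GROUP = 50

# Percentage of actual cases that leaves a trace in public administrative data.
# Invented for illustration: families relying on public services are documented
# far more thoroughly than families using private providers.
VISIBILITY_PCT = {"public_services": 90, "private_services": 20}


def recorded_cases(actual_cases, visibility_pct):
    """Number of cases that actually appear in the data the system learns from."""
    return actual_cases * visibility_pct // 100


recorded = {
    group: recorded_cases(ACTUAL_CASES_PER_GROUP, pct)
    for group, pct in VISIBILITY_PCT.items()
}
total = sum(recorded.values())
for group, count in recorded.items():
    # Share of all recorded cases, i.e. what a data-driven risk score would "see".
    print(f"{group}: {count} recorded cases, {count / total:.0%} of the data")
```

With identical underlying numbers, the data contain 45 recorded cases for one group and 10 for the other, so the surveillance gap, not actual behaviour, shapes what the system treats as risk.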

In Europe, AI systems are increasingly deployed in sensitive areas that extend far beyond consumer protection. In Austria, the so-called AMS algorithm of the Public Employment Service (Arbeitsmarktservice, AMS) was intended to help caseworkers decide which resources, such as training opportunities, should be allocated on the basis of a calculated “integration chance.” Here, there is a risk that biased data amplify existing inequalities and disadvantage people whose prospects in the job market are already poorer. In Germany, the predictive policing system HessenData relies on controversial software from the US company Palantir to predict crime. Such technologies are under scrutiny because they are often based on distorted datasets, which can result in marginalised groups, such as people of colour and low-income individuals, being disproportionately surveilled and criminalised. Both examples illustrate that using AI in such areas risks entrenching existing discrimination rather than reducing it.

These distortions make it particularly challenging to create fair and representative AI systems. Regulating such systems is further complicated by the fact that privileged groups are often monitored less intensively and can more easily resist the collection of their data. Marginalised groups, by contrast, have few means to contest the use of their data or the discriminatory outcomes of such systems. In many cases, the processes in which AI is deployed are opaque, and avenues for challenging decisions remain unclear. All of this shows that regulation often lags behind technological developments, allowing AI technologies to further entrench existing power structures.

The Human in the Loop

In light of these challenges, the role of human oversight is increasingly brought to the fore in regulatory debates. A central approach of the AI Act is the call for “Human in the Loop” models, which are intended to ensure that humans are involved in the decision-making processes of AI systems. Article 14 of the AI Act stipulates that high-risk AI systems must be designed so that they can be effectively monitored during their use. It states:

“High-risk AI systems shall be designed and developed in such a way, including with appropriate human-machine interface tools, that they can be effectively overseen by natural persons during the period in which they are in use.” (AI Act, Article 14)

This marks an interesting turn. The use of AI was initially justified as a way to eliminate discrimination. Put simply, the idea was that machines would be far better equipped than humans to judge impartially and to make decisions free from discriminatory logics and social practices. However, the argument of this article has shown why this promise has yet to be fulfilled. In reality, human intervention remains essential to ensure that automated decisions do not continue to reproduce biases. But what requirements must these humans meet to intervene meaningfully? What information do they need? At which points should they be involved? And how can we ensure that this interaction is not only legally sound but also transparent and comprehensible?

The Alexander von Humboldt Institute for Internet and Society is addressing these challenges in its “Human in the Loop?” project, which explores new approaches to meaningfully involving humans in AI decision-making processes. Here, past and future meet: while AI was originally conceived as a way to eliminate biases, human intervention remains necessary, and research into “Human in the Loop” models opens up new approaches that redefine the interplay between humans and machines. This creates a tension between old ways of working and future value creation, in which humans and machines compete for interpretative authority. This blog post offers a deeper insight into the still-open questions surrounding this human-machine interaction.

Remarks

[i] This contribution is based on reflections that I have already made here and on the blog of the Leibniz Institute for Media Research | Hans-Bredow-Institut (HBI).

[ii] The “God Trick”, a term coined by Donna Haraway (1988), refers to the paradox of a supposedly omniscient gaze that sees everything from nowhere. According to Haraway, a fundamentally biased position (mostly male, white, heterosexual) is generalised under the guise of objectivity and neutrality. This metaphor has often been taken up in discussions of technological development and data science, and it closely matches the claim to objectivity that AI suggests. Interesting thoughts on this context can be found in Marcus (2020).

References

Ahmed, Maryam (2024): Car insurance quotes higher in ethnically diverse areas. Online: https://www.bbc.com/news/business-68349396 [20.03.2024]

Biselli, Anna (2022): BAMF weitet automatische Sprachanalyse aus. Online: https://netzpolitik.org/2022/asylverfahren-bamf-weitet-automatische-sprachanalyse-aus/ [20.03.2024]

Buolamwini, Joy/Gebru, Timnit (2018): Gender Shades. Online: https://www.media.mit.edu/projects/gender-shades/overview/ [20.03.2024]

Bundesregierung (2020): Strategie Künstliche Intelligenz der Bundesregierung. Online: https://www.bundesregierung.de/breg-de/service/publikationen/strategie-kuenstliche-intelligenz-der-bundesregierung-fortschreibung-2020-1824642 [20.03.2024]

Deutscher Bundestag (2023): Sachstand. Regulierung von künstlicher Intelligenz in Deutschland. Online: https://bundestag.de/resource/blob/940164/51d5380e12b3e121af9937bc69afb6a7/WD-5-001-23-pdf-data.pdf [20.03.2024]

European Commission (2020): White Paper on Artificial Intelligence – A European approach to excellence and trust. Online: https://commission.europa.eu/document/download/d2ec4039-c5be-423a-81ef-b9e44e79825b_en?filename=commission-white-paper-artificial-intelligence-feb2020_en.pdf [20.03.2024]

Köver, Chris (2019): Streit um den AMS-Algorithmus geht in die nächste Runde. Online: https://netzpolitik.org/2019/streit-um-den-ams-algorithmus-geht-in-die-naechste-runde/ [20.03.2024]

Leisegang, Daniel (2023): Automatisierte Datenanalyse für die vorbeugende Bekämpfung von Straftaten ist verfassungswidrig. Online: https://netzpolitik.org/2023/urteil-des-bundesverfassungsgerichts-automatisierte-datenanalyse-fuer-die-vorbeugende-bekaempfung-von-straftaten-ist-verfassungswidrig/ [20.03.2024]

Lütz, Fabian (2024): Regulierung von KI: auf der Suche nach „Gender“. Online: https://www.gender-blog.de/beitrag/regulierung-ki-gender [20.03.2024]

Netzforma (2020): Wenn KI, dann feministisch. Impulse aus Wissenschaft und Aktivismus. Online: https://netzforma.org/wp-content/uploads/2021/01/2020_wenn-ki-dann-feministisch_netzforma.pdf [20.03.2024]

Peña, Paz/Varon, Joana (2021): Oppressive A.I.: Feminist Categories to Understand its Political Effects. Online: https://notmy.ai/news/oppressive-a-i-feminist-categories-to-understand-its-political-effects/ [20.03.2024]

Rau, Franziska (2023): Polizei Hamburg will ab Juli Verhalten automatisch scannen. Online: https://netzpolitik.org/2023/intelligente-videoueberwachung-polizei-hamburg-will-ab-juli-verhalten-automatisch-scannen/ [20.03.2024]

UN AI Advisory Board (2023): Governing AI for Humanity. Online: https://www.un.org/techenvoy/sites/www.un.org.techenvoy/files/ai_advisory_body_interim_report.pdf [20.03.2024]

UNESCO (2021): Recommendation on the Ethics of Artificial Intelligence. Online: https://www.unesco.org/en/articles/recommendation-ethics-artificial-intelligence [20.03.2024]

UNESCO (2022): Project Women4Ethical AI. Online: https://unesco.org/en/artificial-intelligence/women4ethical-ai [20.03.2024]

AI Act (2024): European Parliament legislative resolution of 13 March 2024 on the proposal for a regulation of the European Parliament and of the Council laying down harmonised rules on Artificial Intelligence and amending certain Union legislative acts, TA(2024)0138. Online: https://www.europarl.europa.eu/RegData/seance_pleniere/textes_adoptes/definitif/2024/03-13/0138/P9_TA(2024)0138_DE.pdf [27.03.2024]

D’Ignazio, Catherine/Klein, Lauren F. (2020): Data Feminism. MIT Press.

Eubanks, Virginia (2018): Automating Inequality – How High-Tech Tools Profile, Police, and Punish the Poor. St. Martin’s Press.

Haraway, Donna (1988): Situated Knowledges: The Science Question in Feminism and the Privilege of Partial Perspective. In: Feminist Studies, Vol. 14, No. 3, pp. 575-599.

Koenecke, Allison et al. (2020): Racial disparities in automated speech recognition. In: Proceedings of the National Academy of Sciences, Vol. 117, No. 14, pp. 7684-7689.

Kolleck, Alma/Orwat, Carsten (2020): Mögliche Diskriminierung durch algorithmische Entscheidungssysteme und maschinelles Lernen – ein Überblick. Online: https://publikationen.bibliothek.kit.edu/1000127166/94887549 [20.03.2024]

Rehak, Rainer (2023): Zwischen Macht und Mythos: Eine kritische Einordnung aktueller KI-Narrative. In: Soziopolis: Gesellschaft beobachten.

Varon, Joana/Peña, Paz (2021): Artificial intelligence and consent: a feminist anti-colonial critique. In: Internet Policy Review. 10 (4). Online: https://policyreview.info/articles/analysis/artificial-intelligence-and-consent-feminist-anti-colonial-critique [12.03.2024]

West, Sarah Myers/Whittaker, Meredith/Crawford, Kate (2019): Discriminating Systems: Gender, Race and Power in AI. AI Now Institute. Online: https://ainowinstitute.org/discriminatingsystems.html [20.03.2024]

FemAI (2024): Policy Paper – A feminist vision for the EU AI Act (on the intersectional feminist critique of the AI Act). Online: https://www.fem-ai-center-for-feminist-artificial-intelligence.com/_files/ugd/f05f97_0c369b5785d944fea2989190137835a1.pdf [08.04.2024]

This post represents the view of the author and does not necessarily represent the view of the institute itself. For more information about the topics of these articles and associated research projects, please contact info@hiig.de.
