
NetzDG reports: Removals of online hate speech in numbers

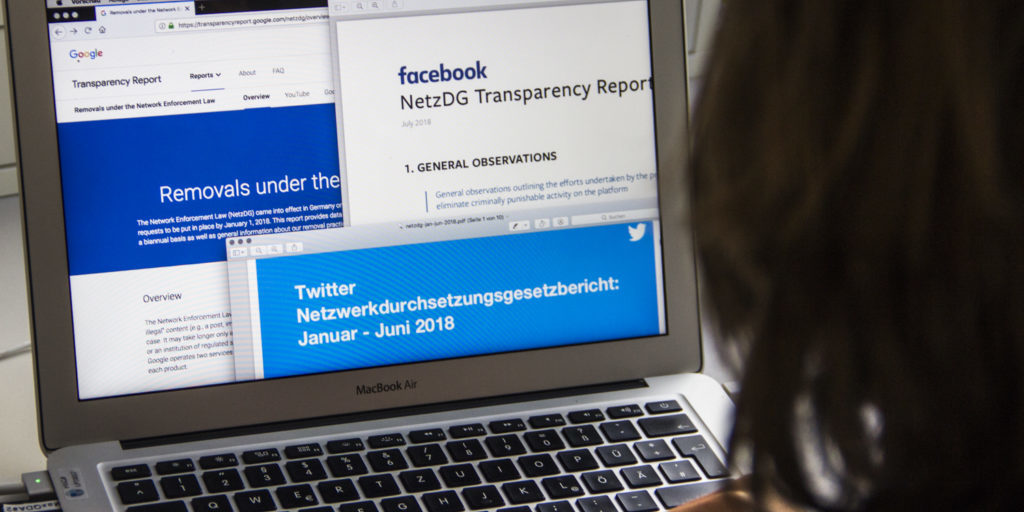

Six months after the so-called Network Enforcement Act (NetzDG) took effect, social media platforms are now required to report publicly on their removal of illegal hate speech. HIIG researcher Kirsten Gollatz, HIIG fellow Martin J. Riedl and Jens Pohlmann took a closer look at the reports: What can we learn from these numbers?

CORRECTION: An earlier version of this blog article incorrectly referred to YouTube being “flooded with complaint notices, but [that it] only identified a need to take action in roughly 11 percent of reported pieces of content.” The reference to YouTube ought to have been to Twitter. This has now been corrected in the text below.

In June 2017, the German parliament passed a federal law that requires social media platforms to implement procedures allowing users to report illegal content. Under the Network Enforcement Act[1], also known as NetzDG, platforms must also publish reports on how they deal with these complaints. The law came into force on October 1, 2017 and took full effect on January 1, 2018, after a transition period. Platforms that systematically fail to establish and enforce such complaint management systems can be fined up to 50 million euros.

At the end of July, Twitter, Facebook and Google released their figures for the first time. While the numbers will bring public attention to platforms' content moderation practices in the short term, it is still unclear what effects the newly established infrastructure for removing problematic content will have in the long run. Will the largest platforms act more proactively when detecting and removing user content and accounts? The question at the core of these reports is this: Do the figures indicate 'overblocking', a concern previously articulated by non-governmental organisations such as Human Rights Watch, by the United Nations Special Rapporteur on freedom of opinion and expression, and by civil society organisations? Or do the figures even suggest that the NetzDG establishes a form of institutionalised pre-censorship?

TL;DR: The bare numbers don't tell us much, but they matter anyway.

NetzDG, the risk of ‘overblocking’ and other concerns

In the face of the ongoing spread of hateful content on social media platforms, more and more governments are pressuring companies to take action and proactively detect and remove problematic content. In 2015, Heiko Maas, then German Federal Minister of Justice and Consumer Protection, formed a task force with representatives of large platforms to improve the ways in which companies handle illegal hate speech. However, the group's efforts could not defuse the heated debate surrounding "fake news" and "hate speech". The two terms were at the center of a highly politicised discourse, which eventually prompted a shift towards a legislative solution. This culminated in a draft law in the spring of 2017. In June 2017, despite harsh criticism, the German parliament passed the draft, albeit in a watered-down version.

Concerns have been raised on multiple levels since the law first appeared in draft form. Critics have repeatedly expressed fears that the law would undermine freedom of expression by delegating to private companies the decision of whether certain speech is unlawful. Experts have argued that the draft law is at odds with human rights standards[2] and German constitutional law. Civil and human rights organisations as well as ICT industry bodies called on the European Commission to ensure compliance with EU law, fearing that outsourcing decisions about the legality of speech to private corporations would incentivise the use of automated content filters. David Kaye, United Nations Special Rapporteur on freedom of opinion and expression, raised further concerns: he argued that in many cases, decisions about the legitimacy of content would require an in-depth assessment of the context of speech, something social media companies would not be able to provide. Human Rights Watch and other international critics also opposed the NetzDG because, in their view, it would set a precedent for governments around the world to restrict online speech.

The law has repeatedly triggered fears of 'overblocking', that is, the mistaken or preventive removal of content that is lawful or should be considered acceptable. Short turnaround times have been at the center of the critique, since the time available is a crucial factor in assessing the legality of content. According to Article 1, Section 3 of the NetzDG, platforms must remove or block access to "manifestly unlawful" content within 24 hours of receiving a complaint, and to "all unlawful content" within 7 days. The final version of the law aims to minimise the risk of 'overblocking' by allowing platform operators to exceed the 7-day limit in more complicated cases, in particular where the decision depends on whether a factual allegation is false or on other factual circumstances, or where the decision is referred to a self-regulatory institution for external review. Nonetheless, the basic principle remains: while the law imposes drastic fines if platforms do not detect and remove illegal content sufficiently within the given time frame, there are no legal consequences if platforms remove more content than legally necessary.

A closer look at the numbers

Do the reports that social media platforms released in July provide data that substantiate the risk of 'overblocking'? Intended to make platforms more accountable and to increase transparency, Article 1, Section 2 of the law requires all commercial social media platforms that receive more than 100 complaints about unlawful content per calendar year to publish a report every six months.

Table 1: Reported numbers by selected platforms, January – June 2018

| Platform | Total items reported | Items that resulted in action | Removed or blocked within 24h |
|---|---|---|---|
| Facebook | 1,704 | 362 (21.2 percent) | 76.4 percent (of reports) |
| YouTube | 214,827 | 58,297 (27.1 percent) | 93.0 percent (54,199) |
| Google+ | 2,769 | 1,277 (46.1 percent) | 93.8 percent (1,198) |
| Twitter | 264,818 | 28,645 (10.8 percent) | 97.9 percent (28,044) |

Table 1 summarises the total number of items reported in each platform's transparency report for the six-month reporting period, together with the share of items for which platforms upheld the complaint and removed or blocked the content. The last column shows how much of this action took place within 24 hours.
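The derived percentages in Table 1 are simple ratios: the action rate is items actioned divided by items reported, and the 24-hour figure is items removed or blocked within 24 hours divided by items actioned. The Python sketch below recomputes them from the raw counts; it is an illustration of that arithmetic, not any platform's own method, and the names `PlatformReport`, `action_rate` and `within_24h_rate` are hypothetical. It assumes 214,827 reported items for YouTube, the count that reproduces the 27.1 percent shown for YouTube (58,297 / 214,827). Facebook's 24-hour share is left out because the table gives it only as a percentage "of reports", without an item count.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PlatformReport:
    """Counts for one platform, January to June 2018 (hypothetical structure)."""

    name: str
    items_reported: int
    items_actioned: int  # items removed or blocked after a complaint
    actioned_within_24h: Optional[int]  # None where no item count is given


# Counts taken from Table 1; YouTube's total assumes 214,827 reported items.
REPORTS = [
    PlatformReport("Facebook", 1_704, 362, None),
    PlatformReport("YouTube", 214_827, 58_297, 54_199),
    PlatformReport("Google+", 2_769, 1_277, 1_198),
    PlatformReport("Twitter", 264_818, 28_645, 28_044),
]


def percent(part: int, whole: int) -> float:
    """Return part / whole as a percentage, rounded to one decimal place."""
    return round(100 * part / whole, 1)


def action_rate(report: PlatformReport) -> float:
    """Share of reported items that led to removal or blocking."""
    return percent(report.items_actioned, report.items_reported)


def within_24h_rate(report: PlatformReport) -> Optional[float]:
    """Share of actioned items handled within 24 hours, if a count exists."""
    if report.actioned_within_24h is None:
        return None
    return percent(report.actioned_within_24h, report.items_actioned)


if __name__ == "__main__":
    for r in REPORTS:
        within = within_24h_rate(r)
        within_text = "n/a" if within is None else f"{within:.1f} %"
        print(f"{r.name:<9} actioned: {action_rate(r):4.1f} %  within 24h: {within_text}")
```

Keeping the raw counts rather than only the percentages makes each figure in the table checkable, and it makes plain that every rate rests on a platform's own way of counting items, one reason these reports are hard to compare across platforms.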

What are the key takeaways? Since the law took full effect in January, Facebook, Twitter and Google's YouTube and G+ say they have blocked tens of thousands of pieces of content, mostly within the 24 hours the law mandates for manifestly unlawful content. Notably, all platforms deleted or blocked most of the reported content that required action within one day. However, the platforms rejected the large majority of the complaints they received. Twitter in particular was flooded with complaint notices but identified a need to take action in only roughly 11 percent of reported items.

Are there signs of 'overblocking'? It depends on whom you ask.

Critics had claimed that the NetzDG would encourage social media platforms to err on the side of caution by blocking lawful content in order to avoid fines. The numbers are now open to interpretation by various parties.

After the reports were released, Gerd Billen, State Secretary in the German Ministry of Justice, expressed satisfaction that the law was having its intended effect. "Nevertheless, we are only at the very beginning," he added in a statement to the German news agency dpa. Members of the governing CDU/CSU parliamentary group shared his view and saw no evidence of 'overblocking' so far.

However, earlier opponents of the law see their concerns confirmed and argue that it does lead to the wrongful removal of content. Among them are industry representatives: a Google spokesperson said the concerns were justified because the law requires content to be examined quickly, when it should instead be a matter of careful consideration. Further criticism addresses the lack of public oversight of platforms' decision-making and removal practices. Christian Mihr, managing director of Reporters Without Borders, argues that "an independent control authority is necessary to recognise 'overblocking', that is, the deletion of legally permitted content." More fundamental criticism also persists with regard to due process, since the law does not provide an effective mechanism for users to object to the deletion or blocking of their content or account.

Discussion and trajectories for research

Pushing platforms to be more transparent about their content moderation practices has long been a major concern. Providing numbers on content takedown requests and complaints is therefore useful: it can help inform public debate and decision-making and instigate new advocacy projects and policy initiatives. The numbers are now up for debate and may also prompt new research. Here are some critical questions that deserve attention:

What do the numbers in the reports actually tell us?

From statistical reports, indexes and rankings, we know that bare numbers have flaws, yet they are interpreted and weaponised by various stakeholders. The aggregated numbers that Facebook, Twitter and Google have produced and disclosed are difficult to verify because researchers don't have access to the "raw data". Reports on numbers often conceal more than they reveal, as they strip away important information on context and intent. What's more, the current reports do not provide a useful measure for comparing performance across platforms, since each report follows its own organising structure. Each platform has developed its own reporting practices, which also shape what comes to be presented as transparent, knowable and governable. It is also, we should point out, a shortcoming on the part of German lawmakers that the transparency reports look the way they do. If no standardised reporting practices exist and users have to clear different hurdles on each platform to file a complaint, then what do the numbers actually reveal?

How does the NetzDG affect users' reporting behaviour and, furthermore, platforms' content moderation?

If we want to better understand how companies make decisions about acceptable and unacceptable speech online, we need a more granular understanding of case-by-case determinations. This would allow us to learn more about the scale and scope of policies and practices and, consequently, to understand how the NetzDG affects current content moderation systems. Who requests takedowns, and how strategically do they use reporting systems? How do flagging mechanisms affect user behaviour? And does the NetzDG lay the ground for new reporting mechanisms of content governance online, including a powerful rhetorical legitimation for takedowns? Platforms should team up with social scientists and let academics survey users on questions such as perceived chilling effects and self-censorship prior to posting.

How does the NetzDG affect public perception of, and debates on, online hate speech in the long run?

Transparency efforts in the form of quantitative measures are promoted as a solution to governance problems. Disclosing timely information to the public is believed to shed light on these problems, fostering openness, accountability and trust. However, balancing free speech principles against the protection of users from hateful content requires more than mere numbers. It is a highly complex and contextual undertaking for society at large. Making sense of the numbers is thus a shared responsibility among all actors. For instance, what would an increase in the numbers in the next reports mean? We simply don't know. It could mean that communication has become more hateful and that the law is not very effective in preventing this. It could also indicate that more complaints have been filed, or that companies are simply getting better at removing content. Furthermore, these reports do not indicate whether public discourse on hate speech, its structure and its intensity, is changing. And this may be one of the larger effects of laws like the NetzDG on society.

Does the NetzDG affect how platforms formulate and enforce their own community standards globally?

These reports are more than a few published figures; they matter. We should be aware that the numbers they contain, and how these numbers are produced, disclosed and interpreted, have governing effects by creating a field of visibility[3]. They may affect how users, policy makers and the platforms themselves perceive and define the problem, and which solutions they consider acceptable. The NetzDG directs attention to unlawful online hate speech in the German context while leaving other issues in the dark. In this vein, there has long been a demand for platforms to become more transparent about the extent to which they themselves shape and police the flow of information online. To date, for instance, little is known about how platforms enforce their own rules on prohibited content. With the NetzDG, this question becomes even more salient. Are we correct in assuming, as the recent debate has suggested, that platforms first and foremost control content on the basis of their own policies, and only then according to the NetzDG? Such a practice would raise the question of whether the NetzDG's provisions will nudge platforms to adjust their own content policies accordingly, so that they can police content more easily and without any public oversight. Does the NetzDG also play a role in the convergence of community standards across platforms' global operations?

Will the NetzDG set a precedent internationally?

Finally, numbers can easily "travel" across borders. The NetzDG reports provide figures that describe the current situation in the German context. While such transparency measures start locally, they often diffuse regionally and even globally. The NetzDG must therefore be seen in the context of the transnational challenges of cross-border content regulation. In the absence of commonly agreed-upon speech norms and coherent regulatory frameworks, speech regulation has become a pressing issue on the Internet. Governments around the world, among them France, Vietnam, Russia, Singapore and Venezuela[4], are initiating regulatory policies to restrict online speech they deem unlawful. As Germany has provided a blueprint for a national legal solution that formalises content removal practices by private corporations, other countries may follow.

To conclude, transparency reports about content removals under the German NetzDG are not the endpoint of this discussion. Instead, they feed into debates that reach beyond Germany, politicise how global platforms govern speech online, and provoke new questions.

[1] Act to Improve Enforcement of the Law in Social Networks (Network Enforcement Act).

[2] Schulz, W., Regulating Intermediaries to Protect Privacy Online – the Case of the German NetzDG (July 19, 2018).

[3] Flyverbom, M., Transparency: Mediation and the Management of Visibilities, International Journal of Communication 10 (2016), 110-122.

[4] See for an overview of developments internationally.

This post represents the views of the authors and does not necessarily or exclusively reflect the views of the institute. For more information about the content of these posts and the associated research projects, please contact info@hiig.de
